Google-owned YouTube, which has been under fire for allowing unsafe kids content to flourish on its network, apologized Monday for yet another snafu in its search results.

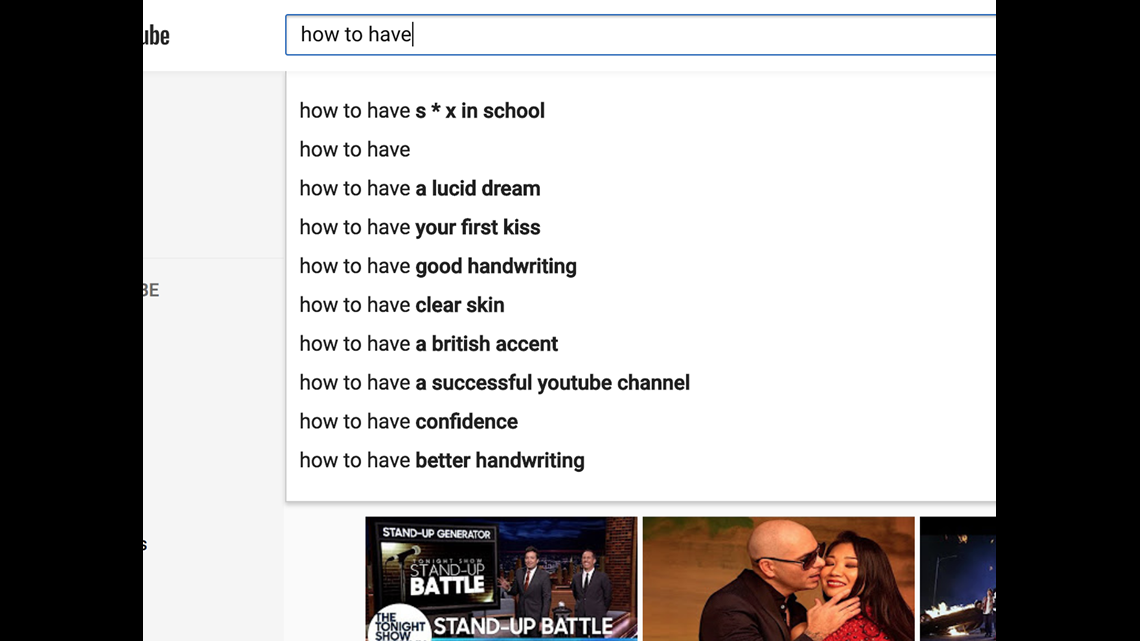

The website BuzzFeed reported Monday that YouTube's search algorithm was autocompleting queries such as "How to have..." with suggestions like "s*x with children."

In a statement late Monday, Google said: "Earlier today our teams were alerted to this profoundly disturbing autocomplete result and we worked to quickly remove it as soon as we were made aware. We are investigating this matter to determine what was behind the appearance of this autocompletion."

What Google wouldn't address was how it started, how widespread it was, and whether the autocomplete results were still in operation.

Kids programming on YouTube has turned into the most popular genre on the world's most-viewed video network. According to website Tubular Labs, four of the top five most-viewed YouTube channels in the United States are devoted to kids and toys.

YouTube rewards people who make and submit videos to the network with a 55% share of the ad revenues generated, ratcheting up the incentive to bring in views with content that taps into niche tastes, isn't freely available elsewhere, and skirts the YouTube algorithm's controls.

Some savvy online producers have figured out ways to pair kids content with violence, sex and other topics parents would shudder over. Over the past weeks, several critics have pointed out that YouTube has turned into a potential nightmare for parents, both in the videos shown and in the no-holds-barred comments section.

After U.K. newspaper The Times published a report on how pedophiles were commenting on videos of kids in baths or in bed, advertisers including Mars and Adidas yanked their ads from the network.

Google said Monday that it has removed 270 accounts that had made "predatory comments on videos featuring minors," and turned off comments on 625,000 videos that were "targeted by child predators." Additionally, it removed ads from nearly 2 million videos and over 50,000 channels "masquerading as family-friendly content."

YouTube is a cash cow for Google, but the largely automated process that offers users a continuous stream of content they might like and pairs ads with videos has been shown to have severe deficiencies when it comes to harmful content.

In March, the video site encountered advertiser pullouts when advertisers found their ads running on content from extremist and hate groups. YouTube responded by adding warnings to extremist videos and preventing comments on them as a way to make the videos harder to find.

In August, YouTube said its combination of improved machine learning and bolstered staff of human experts has helped the site remove extremist and terrorist content more quickly.

But the problems still exist, notably on content targeted at kids or showcasing kids in the videos. Josh Cohen, the co-founder of Tube Filter, a website devoted to chronicling online video, says YouTube responded more quickly to its latest snafu than it has in the past.

"By the end of the year, this stuff will be harder to find on YouTube," he says. "It won’t be all gone. But this is an ongoing, sustained effort for YouTube."

Follow USA TODAY's Jefferson Graham on Twitter, @jeffersongraham